
Lemonade swears it totally isn't using AI for phrenology

The AI-driven insurer deleted tweets bragging about its spurious fraud detection techniques, then somehow made things even worse.

Big tech companies still love to tout artificial intelligence systems as an innovative solution to endemic human bias and racism... all despite numerous reports and extensive analysis repeatedly refuting their effectiveness. AI-driven insurance claims service Lemonade, however, has apparently not seen any of this evidence.

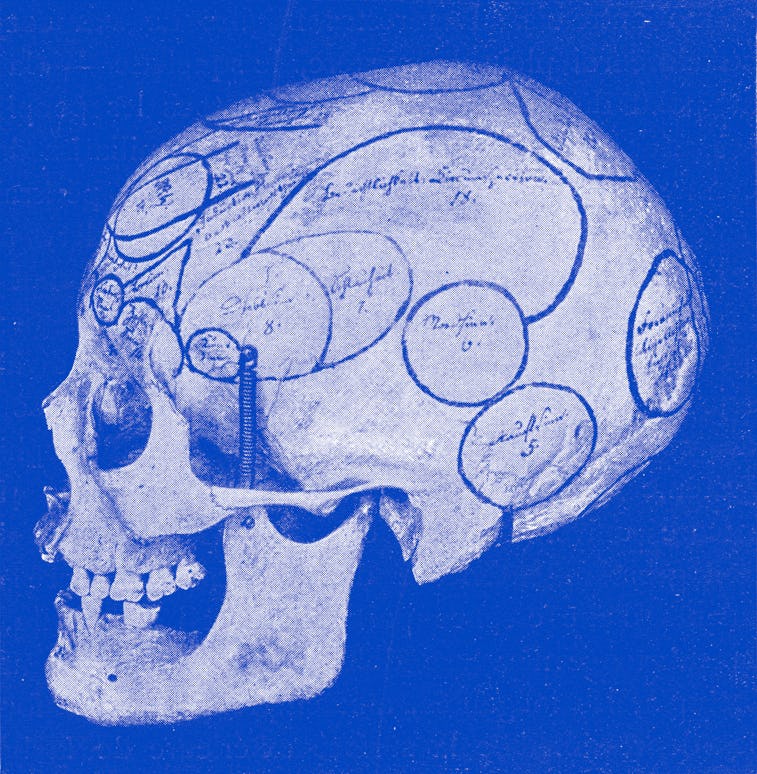

In fact, the company has managed to make a recent social media snafu even worse by trying to walk back boasts of its AI’s supposed ability to detect incriminating “non-verbal cues” and other possible indicators of fraud. In doing so, the insurer hasn’t just enraged its users, it’s directly contradicted its own SEC filings. And it’s made it sound a lot like it’s using AI for the insurance equivalent of phrenology.

It started out with a tweet — As Vice reports, the little whoopsie-daisy stems from a now-deleted Twitter thread on Lemonade’s official account, in which it advertised its app’s video-claims service.

“For example, when a user files a claim, they record a video on their phone and explain what happened. Our AI carefully analyzes these videos for signs of fraud. It can pick up non-verbal cues that traditional insurers can’t, since they don’t use a digital claims service,” read one of the tweets.

Experts quickly pointed out the unreliability of AI-driven physiognomic analysis, and inherent bias issues within artificial intelligence programming generally. AI has a racism problem, so perhaps it shouldn’t be used to assess insurance claims.

How did it end up like this? — Lemonade hastily walked back its assertions with an apologetic blog post today, leading with, “TL;DR: We do not use, and we’re not trying to build, AI that uses physical or personal features to deny claims.”

And yet...

Seriously, Lemonade. Just read Algorithms of Oppression.

“Entire claim through resolution” — As CNN’s Rachel Metz highlights, Lemonade’s attempt to cover its posterior is demonstrably at odds with the facts if we’re judging from the company’s own paperwork (which we are): “AI Jim handles the entire claim through resolution in approximately a third of cases, paying the claimant or declining the claim without human intervention (and with zero claims overhead, known as loss adjustment expense, or LAE),” reads Lemonade’s S-1 SEC filing, with “AI Jim” referring to the company’s claims bot.

Just for reference, if you’re trying to market your AI insurance bot as “unbiased,” naming it “AI Jim” really doesn’t do much to further your case. Anyway, we’ll go ahead and join the chorus of people online by asking: Which is it, Lemonade? Is it true that you “have never, and will never, let AI auto-reject claims,” or does... um... AI Jim handle claims “through resolution” approximately 33% of the time? It can’t be both.

Promises of change in the months to come — Lemonade assures everyone that “we have had ongoing conversations with regulators across the globe about fairness and AI” in past years, and promises “to look into the ways we use AI today and the ways in which we’ll use it going forward.” Lemonade promises “to share more about these topics in the coming months.” We look forward to hearing all about it on Twitter. And if we ever need to claim from Lemonade, we’re going to wear a big hat in our video so AI Jim can’t measure our head.