TikTok will now warn users when videos are a little fishy

Sometimes fact-checkers can't verify something as true or false. TikTok's ready to try and tackle the gray middle ground.

TikTok is adding a pop-up notification on videos containing “questionable information,” the company announced today in a blog post. The warning label expands the platform’s existing misinformation program: it applies to videos whose claims fact-checkers haven’t been able to verify but that still seem a little… off.

“Sometimes fact checks are inconclusive or content is not able to be confirmed, especially during unfolding events. In these cases, a video may become ineligible for recommendation into anyone’s For You feed to limit the spread of potentially misleading information,” writes Gina Hernandez, TikTok’s Product Manager of Trust & Safety. “Today, we’re taking that a step further to inform viewers when we identify a video with unsubstantiated content in an effort to reduce sharing.”

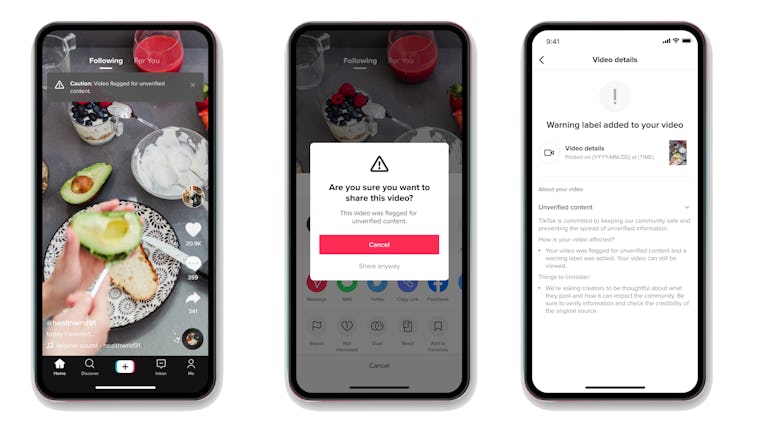

When a video is flagged as potentially misleading, TikTok will now alert both creators and viewers to that fact. The platform will also make these videos more difficult to share. That’s much further than most social media companies have gone in limiting the spread of misinformation on their platforms.

Unobtrusive and important — When a video contains potentially misleading information, TikTok will now display a small banner at the top of the video. The banner reads: “Caution: Video flagged for unverified content.” When a user attempts to share a video with this label, a pop-up message asks, “Are you sure you want to share this video?” along with a bright red cancel button.

TikTok will also send a notification to the video’s creator letting them know it’s been flagged as “unverified content,” along with some tips for avoiding such flags in the future.

The pains of moderation — All social media is difficult to moderate, and video makes the task harder still, simply because reviewing footage is more painstaking than reviewing text. That problem is compounded by the prevalence of misinformation, which requires third-party fact-checking and (ideally) double-checking.

Sometimes information isn’t easily categorizable as truth or fiction, and while fact-checkers work that out, the questionable information can continue to spread unchecked. By adding a third category (call it “maybe this isn’t true”), TikTok is fighting misinformation that might otherwise go unchallenged for lack of clarity.

In its testing, TikTok says, the new messaging decreased shares of videos carrying the questionable-content warning by 24 percent. That’s a substantial reduction in the spread of potentially harmful content.

The feature is rolling out globally in the next few weeks and is available beginning today in the United States and Canada.