Facebook is teaching robots to really feel things (with their hands)

Facebook AI Research wants its tactile sensing models and hardware to be available to any and all researchers in the field.

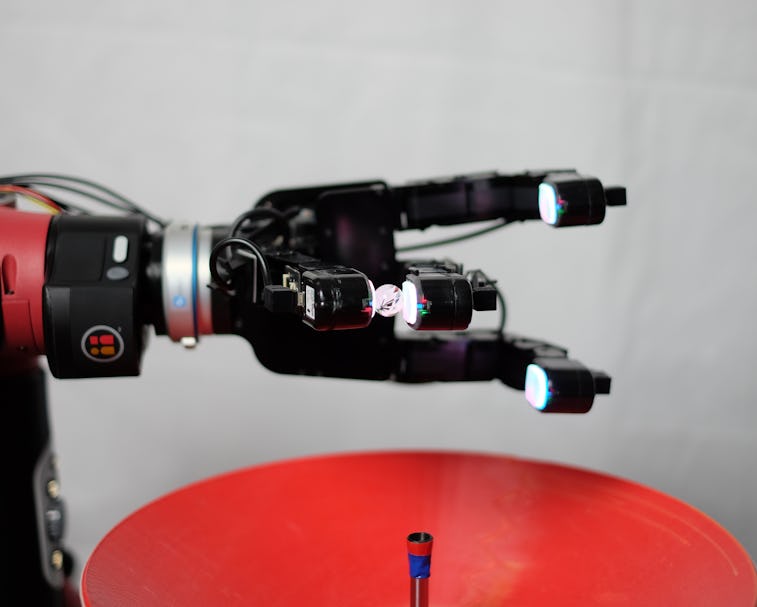

Facebook’s artificial intelligence arm has built sensors that allow robots to “feel” the objects they’re touching. Facebook AI Research (FAIR), which apparently hasn’t received the Meta rebrand yet, detailed the system, which is built around a sensor called DIGIT, in a Monday morning blog post.

Touch, FAIR explains, is much more than just a way for humans to express emotion. It’s a key way in which we gather information about the world around us. Sensory cues like temperature, texture, weight, and state of matter are all observations we’d be entirely blind to without our sense of touch.

The field of robotics has yearned to reproduce these touch-enabled observations for a long time, but it’s only now that AI has progressed far enough to begin making it a reality. Facebook’s proposed solution is particularly interesting in that it actually uses vision — a sense robotics has had much success in replicating — to enable touch-based observation.
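To picture how vision can stand in for touch, imagine a camera watching the inside of a soft pad: press an object into the pad and the image changes. The snippet below is only a rough sketch of that idea, assuming tiny grayscale frames and a simple brightness threshold. It is not FAIR's code, not DIGIT's actual processing, and not the PyTouch API; the function names `contact_mask` and `contact_area`, the threshold, and the sample data are all made up for illustration.

```python
# Hypothetical illustration only: not FAIR's code and not the PyTouch API.
# Frames are small grayscale images stored as lists of rows (values 0-255).

def contact_mask(reference, frame, threshold=20):
    """Mark pixels whose brightness changed by more than `threshold`
    relative to the no-contact reference frame."""
    return [
        [abs(p - r) > threshold for p, r in zip(frame_row, ref_row)]
        for frame_row, ref_row in zip(frame, reference)
    ]

def contact_area(mask):
    """Fraction of pixels flagged as in contact."""
    total = sum(len(row) for row in mask)
    touched = sum(sum(row) for row in mask)
    return touched / total if total else 0.0

# A made-up 4x4 reference image of the untouched pad ...
reference = [[100] * 4 for _ in range(4)]
# ... and a made-up frame where an object dents the center 2x2 region.
frame = [row[:] for row in reference]
for y in (1, 2):
    for x in (1, 2):
        frame[y][x] = 160

mask = contact_mask(reference, frame)
print(contact_area(mask))  # 4 of 16 pixels changed -> 0.25
```

A real sensor would presumably work with far larger color images and learned models rather than a fixed threshold, but the basic move is the same: compare what the camera sees now with what it saw when nothing was touching.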

By some miracle of the supply chain overlords, DIGIT costs all of $15 to produce. And Facebook is actually making its research schematics open source, for once.

Tactile sensing — FAIR’s research is divided into four areas: hardware, touch processing, datasets, and simulation. Together those pillars allow for more precise tactile sensing and make the work easier for outside researchers to adopt.

DIGIT is the hardware component here. Facebook first unveiled the ultra-compact sensor array last year. The company says that, compared to commercially available tactile sensors, DIGIT has hundreds of thousands more contact points.

Advanced simulation is what’s allowed FAIR to fine-tune DIGIT and its software without spending huge amounts of time collecting actual touch data. This simulation system, called TACTO, creates high-resolution touch readings at hundreds of frames per second using machine learning models.

Machine learning has been crucial to FAIR’s research here, but the group is well aware that, for outside researchers, putting those models to use is often incredibly complex. To this end, FAIR created a library called PyTouch that makes it much easier for researchers to train and deploy models.

As for those datasets… well, FAIR says it’s still working to “carefully investigate” them before releasing metrics and benchmarks to the world.

For the masses — Facebook is by no means the first company to pour its resources into understanding robotic touch. What FAIR hopes will set its research apart, beyond the impressive sensor array and machine learning models, is how easy it is for other researchers to access.

To this end, FAIR has partnered with GelSight, a group started at MIT that created one of the first vision-based tactile sensors, to manufacture DIGIT for the masses. FAIR hopes this will speed up progress in the field, where one of the largest barriers to entry is simply sourcing and affording the hardware.

PyTouch, FAIR’s machine learning library, works toward the same end goal. FAIR hopes its research can “empower a broader research community to leverage touch in their applications.” That openness stands in stark contrast to Facebook HQ’s usual stance on research, which is to only be forthcoming when threatened with legal action.

FAIR notes that there are still many hurdles to clear before tactile sensing is primed for the mainstream. But the promise of a robot that can handle your eggs without breaking them is on the horizon.