Google AI no longer uses gender binary tags on images of people

No more misgendering from Google's image-labeling AI.

Google's image-labeling AI tool will no longer tag pictures of people with gendered labels like "man" and "woman," according to an email seen by Business Insider. In the email, Google cites its own AI principles as the basis for the change.

This is a progressive move by Google — and one that will hopefully set a precedent for the rest of the AI industry.

Dismantling the binary — Ethics aside, Google also says it made the change because a person's gender can't be inferred from their appearance. Google is correct on that count. A binary label might be useful for blunt categorization, but it leaves out the many gender identities that fall outside "man" and "woman."

Though Google's policies don't say so explicitly, dropping gendered labels from its AI makes the software more inclusive of transgender and non-binary people. It's a move the rest of the tech industry would do well to emulate.

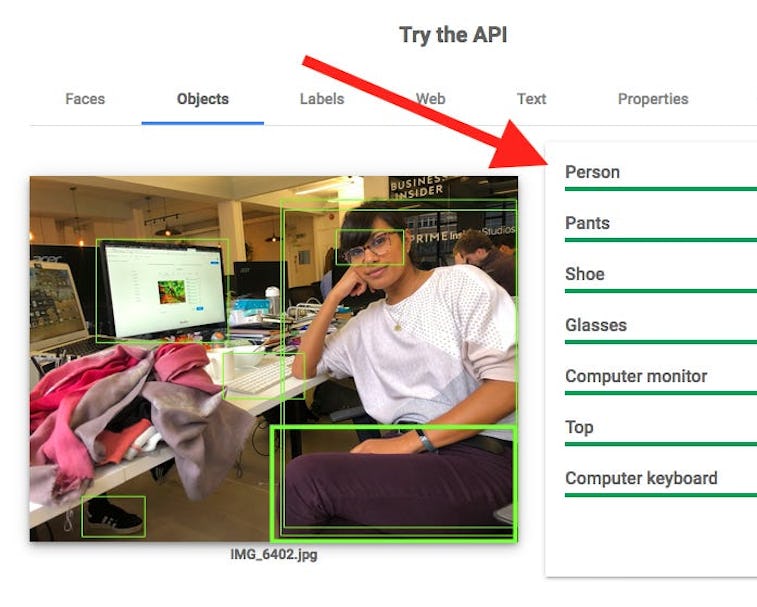

The change is in effect — Business Insider tested Google's Cloud Vision API, which developers can use to label photos automatically, and found the update was already live. Rather than tagging a test photo as "man" or "woman," the API simply labeled it "person."

Gender bias in AI is a real problem — Artificial intelligence might seem impartial, but many algorithms have been shown to be biased by gender, age, or race. A recent National Institute of Standards and Technology study of facial-recognition algorithms showed just how uneven their accuracy can be across demographic groups.

Google is setting the best kind of example by dropping gendered labels from its image-recognition AI entirely. Now we wait for other companies to follow suit.