DishBrain

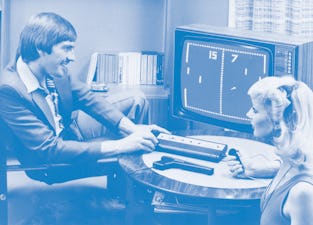

This petri dish of brain cells learned 'Pong' way faster than AI

5 minutes: the time needed to teach a neuron network to play 'Pong'

Just about anyone can play Pong, the classic table tennis arcade game. No, seriously, even monkeys can play it, with the right equipment. And artificial intelligence can play Pong, obviously — video game AI can complete much more complex tasks than following a ball of pixels across the screen.

Teaching AI to play Pong from scratch usually takes about 90 minutes. That’s an impressive feat for an hour and a half. But apparently a pile of brain cells (yes, literally a pile of brain cells) can be taught how to play Pong in just five minutes. For those keeping track at home, that’s roughly 6 percent of the time AI takes to learn the game, or about 18 times faster.
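For anyone who wants to check the math, here is a quick sketch in Python. The two figures are the ones quoted above; the variable names are just for illustration.

```python
# Learning times as reported in the article, in minutes.
AI_MINUTES = 90
DISHBRAIN_MINUTES = 5

share = DISHBRAIN_MINUTES / AI_MINUTES    # fraction of the AI's learning time
speedup = AI_MINUTES / DISHBRAIN_MINUTES  # how many times faster

print(f"{share:.1%} of the time, {speedup:.0f}x faster")
# prints "5.6% of the time, 18x faster"
```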

If the very idea of “teaching” disembodied brain cells to do anything at all seems foreign to you, well, join the club. As Australia-based Cortical Labs tells New Scientist, the neurons are being studied in Petri dishes to observe how networks form and interact with stimuli. The dishes themselves conduct electricity through the brain cell networks; these signals can then be used to change the networks’ behaviors. They become living computer chips, in a way.

Becoming the game: As Brett Kagan, chief scientific officer of Cortical Labs, explains, these neurons are more than just brain cells dumped on a dish. They’re a network capable of learning and completing tasks.

“We often refer to them as living in the Matrix,” Kagan says. “When they are in the game, they believe they are the paddle.”

Kagan and his team are able to educate the neuron network (which, by the way, they refer to as “DishBrain”) via electric stimulation. The electric signals tell the DishBrain where the ball is and, in just five minutes’ time, the DishBrain figures out how to move its “paddle” to hit the ball. The network of cells becomes the paddle.
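To make that loop a little more concrete, here is a toy sketch in Python. It is not Cortical Labs’ code, and nothing in it comes from the lab: the eight-zone position encoding, the function names, and the `toy_readout` stand-in for the cells’ response are all assumptions made for illustration. In particular, the stand-in follows a hard-coded rule, whereas the real neurons have to learn their response.

```python
# Toy closed loop: encode the ball position as a stimulation zone, then read
# back a paddle move. Every name and number here is a hypothetical
# illustration, not Cortical Labs' actual setup.

NUM_ZONES = 8  # assumed number of stimulation zones


def encode_ball_position(ball_y: float, num_zones: int = NUM_ZONES) -> int:
    """Map a ball height in [0, 1] to the index of the zone to stimulate."""
    clamped = min(max(ball_y, 0.0), 1.0)
    return min(int(clamped * num_zones), num_zones - 1)


def toy_readout(stimulated_zone: int, paddle_zone: int) -> int:
    """Stand-in for the cells' response: step the paddle toward the ball."""
    if stimulated_zone > paddle_zone:
        return 1
    if stimulated_zone < paddle_zone:
        return -1
    return 0


def run_loop(ball_path, paddle_zone: int = 0) -> int:
    """Feed each ball position in and apply the resulting paddle move."""
    for ball_y in ball_path:
        zone = encode_ball_position(ball_y)
        paddle_zone += toy_readout(zone, paddle_zone)
    return paddle_zone
```

Calling `run_loop([0.5] * 4)` walks the paddle from zone 0 up to zone 4, where the ball is. The point is only the shape of the loop: position in, movement out, repeat.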

AI’s got a ways to go: Not to disparage anyone’s experiments here, but AI is looking like small fry in comparison to DishBrain right about now. Pong is an easy game to learn, but five minutes’ learning time is really impressive. Sure, AI can drive delivery trucks around, but imagine how much more quickly DishBrain might be able to learn the same task, given the right inputs.

DishBrain is just a lab experiment right now, but the team at Cortical Labs sees this success as a step toward creating fully synthetic brains. Those brains would theoretically be able to outsmart even the best AI on the market. Which is both thrilling and highly concerning, seeing as AI is already wicked smart. A full-fledged DishBrain might just take over the world.