Red Flags

Twitter passes the buck, will crowdsource misinformation moderation

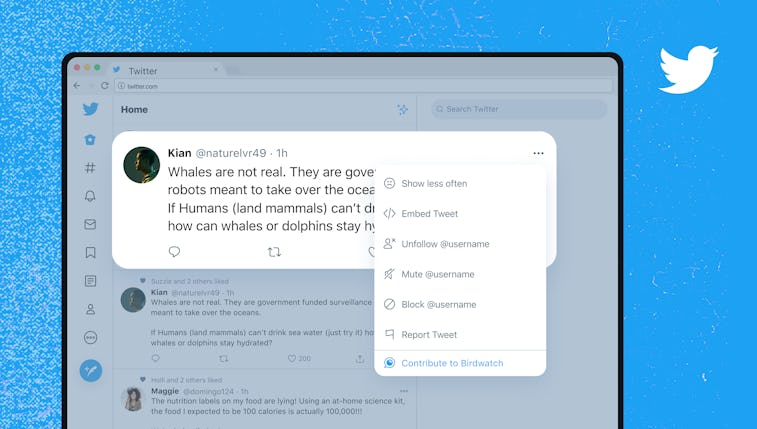

Birdwatch is a new, community-driven tool meant to catch more misinformation and take some heat off of Twitter itself.

On Monday, Twitter revealed a new tool that could, in many ways, serve as a shield. Birdwatch allows Twitter users to flag misinformation, provide context, and rate how much harm a tweet poses.

Eventually, Twitter hopes to roll out the community moderation tool to everyone, but for now, only U.S. users are eligible to sign up. It’s easy to see why Twitter wants to move in this direction, but in Birdwatch’s nascent state, it seems unlikely that it will help anyone other than the company.

What is Birdwatch? — Birdwatch allows notes to be attached to any tweet. Those accepted into the pilot program answer multiple-choice questions about the flagged tweet, write an explanation of why its information is misleading, and link to sources. The process is meant to move beyond simple true-or-false flagging and catch nuanced misinformation that may be partially based in fact.

Notes are ranked by helpfulness, and the ranking also takes diversity of perspectives into account so that notes aren't decided by majority rule alone. For now, notes will live only on the Birdwatch site, and they won't affect tweets' visibility on Twitter. In a push for transparency, the data is fully downloadable, and notes are displayed publicly, even those on tweets from private accounts. That's a good way to catch people who hide behind protected tweets after a negative viral moment, but it's also a privacy concern for the average user.

Just let them do it — Twitter’s war against misinformation is often more aggressive than that of its peers, but it’s still lacking in many respects. Birdwatch feels like a tool designed to lift some of the burdens of fact-checking from the company.

Most notably, Birdwatch runs on free labor, and requiring early adopters to apply to the pilot program only worsens the optics. The criteria are mainly geared toward keeping bots out of the testing phase, but there's also a provision excluding those who have recently been thrown in Twitter jail. There's no shortage of people, often people of color and/or sex workers, who unjustly receive slaps on the wrist from Twitter, so many of them won't be able to bring their perspectives to this pilot phase. Eventually, Twitter wants to implement a reputation system based on each user's track record of notes, but with so many people missing from the building stage of this tool, respectability may supplant expertise.

There's also the issue of coordinated note-bombing campaigns, which could actually boost misleading information or take harassment on the platform to new heights. Twitter is aware of most of these issues, but testers look set to serve mainly as guinea pigs for bad actors while relying on a self-reporting system. You know, the same tool that has allowed Nazis and Gamergaters (not mutually exclusive) to roam free on the site for years.

But why rely on the community to moderate? — It’s clear that Twitter can’t keep up with harmfully misleading tweets, and it also wants to get out of conservatives’ and white nationalists’ crosshairs. These groups have long maintained that Big Social Media is biased against them, so the creation of a community-driven moderation tool could provide a little cover.

That said, Twitter currently has no plans to shutter its in-house misinformation moderation; instead, Birdwatch could absorb some of the flak for especially controversial tweets the company doesn't want to weigh in on.

It also doesn't hurt that Section 230 reform is an increasingly bipartisan issue. Offering an additional line of defense to legislators is a solid, good-faith signal that Twitter's finally ready to deal with white nationalist propaganda... or at least, that it's ready for its users to deal with it.